HW3

Due on Nov 30 (20 points)

(5 points) Consider a binary channel with a crossover probability of 0.15 and an erasure probability of 0.1. What is the capacity of the channel? In other words, when a bit is sent through the channel, there is a probability of 0.15 that the bit is flipped, a probability of 0.1 that the bit is erased (the decoder cannot even tell whether the received bit is 0 or 1), and a probability of 0.75 that the bit is transmitted correctly.
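One way to check an answer numerically is sketched below, assuming the output alphabet is ordered (0, erasure, 1). It evaluates \(I(X;Y)\) over a grid of input distributions and compares the best value with the closed form \((1-\epsilon)\,(1 - H_b(p/(1-\epsilon)))\) for a binary symmetric channel with erasures, where \(p\) is the crossover probability and \(\epsilon\) the erasure probability. The helper names are hypothetical, capacities are in bits, and the grid search is a brute-force check rather than an efficient capacity algorithm (Blahut-Arimoto would be the usual choice).

```python
import math

def mutual_information(q, channel):
    """I(X;Y) in bits for P(X=0) = q and a 2-input channel given as rows P(y|x)."""
    px = [q, 1.0 - q]
    n_out = len(channel[0])
    # Output distribution P(y) = sum_x P(x) P(y|x).
    py = [sum(px[x] * channel[x][y] for x in range(2)) for y in range(n_out)]
    info = 0.0
    for x in range(2):
        for y in range(n_out):
            joint = px[x] * channel[x][y]
            if joint > 0:  # terms with zero probability contribute nothing
                info += joint * math.log2(channel[x][y] / py[y])
    return info

def binary_entropy(p):
    """H_b(p) in bits."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

flip, erase = 0.15, 0.10
keep = 1.0 - flip - erase  # probability the bit arrives intact (0.75)
channel = [
    [keep, erase, flip],  # input 0 -> outputs (0, erasure, 1)
    [flip, erase, keep],  # input 1 -> outputs (0, erasure, 1)
]

# Brute-force search over the input distribution P(X=0).
grid = [i / 1000 for i in range(1001)]
best_q = max(grid, key=lambda q: mutual_information(q, channel))
numeric_capacity = mutual_information(best_q, channel)

# Closed form for this symmetric channel (a uniform input is optimal).
closed_form = (1 - erase) * (1 - binary_entropy(flip / (1 - erase)))

print(f"best P(X=0) = {best_q:.3f}")
print(f"numeric C   = {numeric_capacity:.4f} bits/use")
print(f"closed form = {closed_form:.4f} bits/use")
```

Because the channel treats 0 and 1 symmetrically, the grid search should land on the uniform input, which is why the two printed capacities agree.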

(5 points) Consider a channel composed of two parallel Gaussian channels with noise powers \(N_1 = 1\) and \(N_2 = 5\). Given a total power \(P\), you may assign power \(P_1\) to channel 1 and power \(P_2\) to channel 2, with \(P_1, P_2 \ge 0\) and \(P_1 + P_2 = P\). By trading off power between the two channels, compute the maximum overall capacity \(\frac{1}{2} \log(1+\frac{P_1}{N_1}) + \frac{1}{2} \log(1+\frac{P_2}{N_2})\) for:

(a) \(P = 13\)

(b) \(P = 3\)
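A minimal sketch for checking the allocations, assuming the standard water-filling rule \(P_i = \max(\mu - N_i, 0)\) with the water level \(\mu\) chosen so that the powers sum to \(P\). Here \(\mu\) is found by bisection; the function name `water_filling`, the tolerance, and the use of base-2 logarithms (the problem leaves the base unspecified) are illustrative choices.

```python
import math

def water_filling(noise, total_power, tol=1e-12):
    """Return (powers, capacity_bits) for parallel Gaussian channels."""
    # The water level lies between the smallest noise power and max noise + P.
    lo, hi = min(noise), max(noise) + total_power
    while hi - lo > tol:
        level = (lo + hi) / 2
        used = sum(max(level - n, 0.0) for n in noise)
        if used > total_power:
            hi = level  # too much water: lower the level
        else:
            lo = level  # power left over: raise the level
    level = (lo + hi) / 2
    powers = [max(level - n, 0.0) for n in noise]
    capacity = sum(0.5 * math.log2(1 + p / n) for p, n in zip(powers, noise))
    return powers, capacity

for total in (13.0, 3.0):
    powers, capacity = water_filling([1.0, 5.0], total)
    print(f"P = {total}: P1 = {powers[0]:.4f}, P2 = {powers[1]:.4f}, "
          f"C = {capacity:.4f} bits")
```

Bisection is used because the total allocated power is a nondecreasing function of the water level, so a simple interval search suffices for two (or many) channels.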

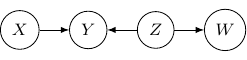

(10 points) For the simple Bayesian network below,

(a) Identify a pair of independent variables.

(b) Identify a pair of variables that are conditionally independent given some other variable(s).

(c) Convert the Bayesian network to an undirected graph.
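The sketch below shows one standard way to check such claims, using the moral ancestral graph criterion: \(X\) and \(Y\) are conditionally independent given \(Z\) when they are disconnected in the moralized graph of the ancestors of \(\{X, Y\} \cup Z\) after the nodes in \(Z\) are removed. Moralization (marry every pair of parents that share a child, then drop edge directions) is also the usual way to convert a Bayesian network to an undirected graph. The network used here is a hypothetical example, not the one in the figure, and all function names are illustrative.

```python
from itertools import combinations

def ancestors(parents, nodes):
    """Return `nodes` together with all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        node = stack.pop()
        for parent in parents.get(node, ()):
            if parent not in result:
                result.add(parent)
                stack.append(parent)
    return result

def moralize(parents, keep=None):
    """Moral graph as an adjacency dict: marry co-parents, drop directions."""
    nodes = set(parents) if keep is None else set(keep)
    adj = {n: set() for n in nodes}
    for child in nodes:
        pas = [p for p in parents.get(child, ()) if p in nodes]
        for p in pas:  # original edges, now undirected
            adj[child].add(p)
            adj[p].add(child)
        for u, v in combinations(pas, 2):  # "marry" the parents
            adj[u].add(v)
            adj[v].add(u)
    return adj

def d_separated(parents, x, y, given=()):
    """True if x and y are conditionally independent given `given`."""
    given = set(given)
    relevant = ancestors(parents, {x, y} | given)
    adj = moralize(parents, relevant)
    # Graph search from x that never passes through a conditioned node.
    seen, stack = {x}, [x]
    while stack:
        node = stack.pop()
        for nbr in adj[node]:
            if nbr == y:
                return False
            if nbr not in seen and nbr not in given:
                seen.add(nbr)
                stack.append(nbr)
    return True

# Hypothetical example network (NOT the figure): A -> C <- B, C -> D.
parents = {"A": [], "B": [], "C": ["A", "B"], "D": ["C"]}
moral = moralize(parents)
print(sorted((u, v) for u in moral for v in moral[u] if u < v))
print(d_separated(parents, "A", "B"))         # marginal independence
print(d_separated(parents, "A", "B", {"C"}))  # conditioning on a common child
print(d_separated(parents, "A", "D", {"C"}))  # blocked chain
```

In this example, A and B are marginally independent but become dependent once their common child C (or its descendant D) is observed, which the moralization step captures by adding the A-B edge.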


Extra credit:

(5 points) For Q.2 above (the two parallel Gaussian channels), find a general expression for the maximum overall capacity as a function of any \(P \ge 0\).
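As a starting point, the standard water-filling form of the optimal allocation can be written as follows, where \(\mu\) (a symbol introduced here) denotes the water level and \((a)^+ = \max(a, 0)\):

\[
P_i = (\mu - N_i)^+, \qquad \sum_{i=1}^{2} (\mu - N_i)^+ = P, \qquad
C(P) = \sum_{i=1}^{2} \frac{1}{2}\log\left(1 + \frac{(\mu - N_i)^+}{N_i}\right).
\]

With \(N_1 < N_2\), channel 2 receives no power until the water level exceeds \(N_2\), that is, while \(P \le N_2 - N_1\), so the general expression for \(C(P)\) is piecewise in \(P\).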

Consider the problem of estimating the variance of a zero-mean Gaussian random variable \(X\sim \mathcal{N}(0,\nu)\) from \(N\) independent samples, where \(\nu\) is the variance of \(X\).

(10 points) Find the Cramér-Rao Lower Bound (CRLB) for unbiased estimators of \(\nu\).
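A worked sketch of the usual Fisher-information route, assuming the samples \(x_1, \dots, x_N\) (symbols introduced here) are i.i.d. draws of \(X\) and using \(\mathbb{E}[x_n^2] = \nu\):

\[
\ln p(\mathbf{x};\nu) = -\frac{N}{2}\ln(2\pi\nu) - \frac{1}{2\nu}\sum_{n=1}^{N} x_n^2,
\qquad
\frac{\partial \ln p}{\partial \nu} = -\frac{N}{2\nu} + \frac{1}{2\nu^2}\sum_{n=1}^{N} x_n^2,
\]
\[
\frac{\partial^2 \ln p}{\partial \nu^2} = \frac{N}{2\nu^2} - \frac{1}{\nu^3}\sum_{n=1}^{N} x_n^2,
\qquad
I(\nu) = -\mathbb{E}\left[\frac{\partial^2 \ln p}{\partial \nu^2}\right] = -\frac{N}{2\nu^2} + \frac{N\nu}{\nu^3} = \frac{N}{2\nu^2},
\]
\[
\operatorname{var}(\hat{\nu}) \ge \frac{1}{I(\nu)} = \frac{2\nu^2}{N}.
\]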

(10 points) Propose an unbiased estimator of \(\nu\) and prove that it is optimal (efficient) by showing that its variance attains the CRLB.
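As a numerical sanity check (not a proof), the sketch below simulates one natural candidate, the sample mean of squares \(\hat{\nu} = \frac{1}{N}\sum_{n} x_n^2\), and compares its empirical mean and variance with \(\nu\) and with the bound \(2\nu^2/N\) that the Fisher information of \(N\) i.i.d. \(\mathcal{N}(0,\nu)\) samples yields. The values of \(\nu\), \(N\), the trial count, and the seed are arbitrary illustrative choices.

```python
import random
import statistics

def simulate(nu, n_samples, trials, seed=0):
    """Monte Carlo mean and variance of nu_hat = (1/N) * sum(x_n ** 2)."""
    rng = random.Random(seed)
    sigma = nu ** 0.5  # random.gauss takes a standard deviation, not a variance
    estimates = []
    for _ in range(trials):
        xs = [rng.gauss(0.0, sigma) for _ in range(n_samples)]
        estimates.append(sum(x * x for x in xs) / n_samples)
    return statistics.fmean(estimates), statistics.pvariance(estimates)

nu, n_samples = 2.0, 10
mean_hat, var_hat = simulate(nu, n_samples, trials=50_000)
crlb = 2 * nu ** 2 / n_samples
print(f"mean of estimates    = {mean_hat:.4f} (true nu = {nu})")
print(f"variance (empirical) = {var_hat:.4f}, CRLB = {crlb:.4f}")
```

The empirical mean should sit close to \(\nu\) (unbiasedness) and the empirical variance close to the bound, up to Monte Carlo error that shrinks as the number of trials grows.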

(15 points) Try to repeat Q1 from the midterm using Lea.